At the time of writing, Paul Christiano's probability estimate for "irreversible catastrophe like human extinction" as a result of AGI misalignment is "around 10-20%". Will he publicly revise his P(AGI doom) upwards to over 50% before 2025?

A video or forum post in which he states so, made before 31/12/2025 PST, will resolve this market YES (if and only if the evidence can be shared publicly); otherwise the market will resolve NO.

Source of quotation: https://www.lesswrong.com/posts/Hw26MrLuhGWH7kBLm/ai-alignment-is-distinct-from-its-near-term-applications

Feb 4, 3:25pm: Will Paul Christiano have updated his P(AGI doom) to over 50% by 2025? → By 2025, will Paul Christiano have updated his P(AGI doom) to over 50%?

@MartinRandall I will wait until he explicitly states that it is "over 50%" or "more likely than not" before resolving this market, in order to avoid displeasing people.

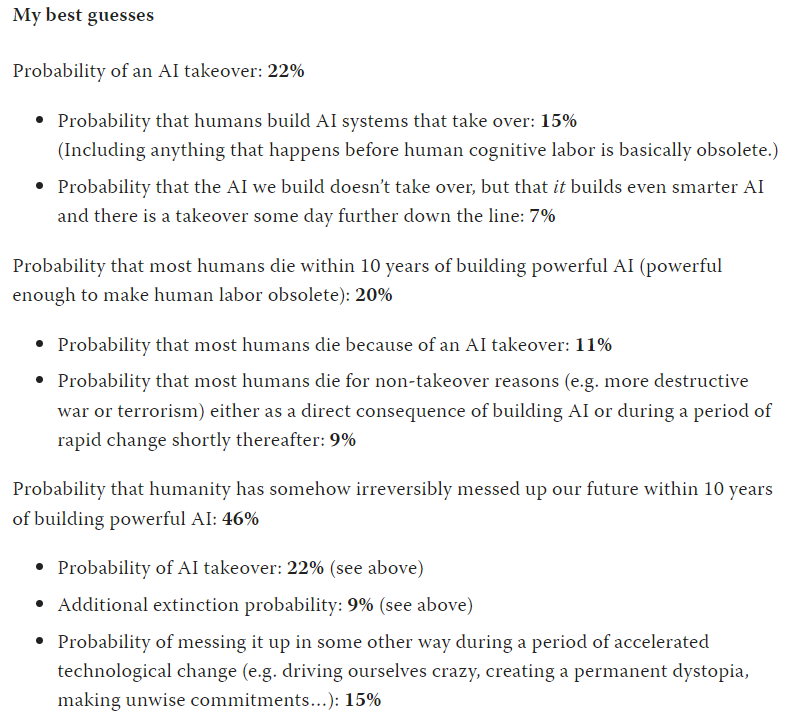

@MartinRandall The quote is, "I believe that AI takeover has a ~50% probability of killing billions..." Killing billions is not an "irreversible catastrophe like human extinction," right? It's not even clear this is an update of Paul's view. Am I missing something?

@MartinRandall No, that says that an AI TAKEOVER has a 50% chance of killing billions, not that our society has a 50% probability of AGI doom. The former is conditional on something; the latter is not.

@JacyAnthis Killing billions is an irreversible catastrophe for billions. Also, if an AI takes over and kills billions then we've lost control of the future and probably almost all the value relative to the possible.

I agree it's not a definitive quote. I put a limit YES order at 40% if you want to buy into it.

@MartinRandall I think the usual framing is that 1% of humanity surviving is not irreversible, because civilization can be rebuilt.

@MartinRandall The 50% chance of killing billions is conditional on an AI takeover happening. He puts the probability of an AI takeover at 22%, which means the probability of AI taking over and killing billions is 11%.
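Spelled out, the step above is just the product rule for a conditional probability. Here $T$ stands for "AI takeover" and $K$ for "AI kills billions" (labels chosen for this illustration, not Christiano's notation), using the 22% and 50% figures as quoted in this thread:

$$P(T \cap K) = P(K \mid T)\,P(T) \approx 0.50 \times 0.22 = 0.11$$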

@MartinRandall I also think the 46% number in this image is not what this market is asking about. This market asks about the event given an 11% chance in that image, which is what the post linked in the criteria calls the "total risk".

The full quote from the post linked in the criteria is:

> There are many different reasons that someone could care about this technical problem.
>
> To me the single most important reason is that without AI alignment, AI systems are reasonably likely to cause an irreversible catastrophe like human extinction. I think most people can agree that this would be bad, though there’s a lot of reasonable debate about whether it’s likely. I believe the total risk is around 10–20%, which is high enough to obsess over.

It's a bit ambiguous, but I think the word "total" is meant to convey that the figure covers both uncertainties: whether alignment is solved, and whether AI kills us if alignment is not solved.

@EA42 Would you clarify which you meant?

@BoltonBailey I think, e.g., "creating a permanent dystopia" would count as an "irreversible catastrophe like human extinction", and is explicitly not included in the 11% figure. It's a catastrophe because it's a dystopia and it's irreversible because it's permanent. If we do that after creating "powerful AI", then I think the powerful AIs were misaligned to humanity, because powerful AIs that were aligned to humanity would not choose to let that happen.

Similarly, if we build powerful AIs and then go extinct in a massive war prior to AI takeover, that counts as an "irreversible catastrophe like human extinction", because it is human extinction. That possibility is likewise explicitly not included in 11%.

I think "total risk" has to mean total, and therefore has to include all these possibilities for irreversible catastrophe, so I continue to see 46% as the latest data point for the market. I would certainly welcome clarification from @EA42.

@MartinRandall It's worth noting also that the 46% number includes non-AI doom scenarios. Do you agree that this market is only about doom that comes from AI?

Edit: Sorry, let me clarify that a bit. The 46% is for doom in a world with AI, but the third sub-point (labeled 15%) seems to me to describe the risk of doom that does not come from AI misalignment, whereas the market's criteria require doom resulting from AI misalignment.

@BoltonBailey It's a bit odd, because the 22% item itself doesn't explicitly say that the AI takeover is bad, but the first sub-point under the 46% line, which says "irreversibly messed up", refers back to it, so I guess that's how Christiano sees it. I could see my way to 22% + 9%, but I still think the 15% doesn't count.
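A quick tally of the two readings being argued over, assuming (as the comments above treat it) that the 46% line is the sum of its three sub-items at 22%, 9%, and 15%. Either way, the total stays below the market's 50% threshold:

$$22\% + 9\% + 15\% = 46\% \quad \text{(all sub-items)}, \qquad 22\% + 9\% = 31\% \quad \text{(excluding the 15\% item)}$$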

@BoltonBailey Given that we haven't created a permanent dystopia in the last X thousand years, if we create one in the first ten years after creating powerful AI, I think it is 99.9% likely to be an "AI doom scenario" that wouldn't have happened without AI. The same goes for other ways the future might be irreversibly messed up.

For example, if Christiano gets hold of a powerful AI and uses it to become an evil omnipotent deity, then I would regard that as a "result of AI misalignment". That is included in the 15% category, since it isn't an AI takeover, and it isn't an extinction scenario.

The question description asks in part:

> Will he publicly revise his P(AGI doom) upwards to over 50% before 2025?

I think the 15% scenarios are clearly included in P(AGI doom). It would be misleading if this market didn't include scenarios where we achieve "alignment" in some narrow, technical, and ultimately useless sense, and excluding them would also mislead anyone following this market to understand the risks our species faces. I think the question title supports my interpretation.

@MartinRandall I guess I can't argue with that. To continue my objections, maybe the 15% also isn't considered "doom" or an "irreversible catastrophe like human extinction" by the market creator or by Christiano.

It just seems suspicious to me that there is a number between 10% and 20% in this image; it makes me think that this was the possibility Christiano was talking about in the post linked in the criteria.

Is there a link to the post that the image above comes from? Does he say anywhere that his beliefs have changed since the 10%-20% comment?

@BoltonBailey Here's the source of "my best guesses": https://www.lesswrong.com/posts/xWMqsvHapP3nwdSW8/my-views-on-doom

That post is dated 2023-04-27, whereas the market originally referenced a 2022-12-13 post.

> Does he say anywhere that his beliefs have changed since the 10%-20% comment?

Yes, in that post he says:

> A final source of confusion is that I give different numbers on different days. Sometimes that’s because I’ve considered new evidence, but normally it’s just because these numbers are just an imprecise quantification of my belief that changes from day to day. One day I might say 50%, the next I might say 66%, the next I might say 33%.

So I am betting YES partly because, although he was at 46% on 2023-04-27, he says his day-to-day estimate might be higher, even as high as 66%. Personally, that would be enough for me to resolve the market YES.

I don't fully understand the thinking of @VictorLJZ, who is due to resolve this market. I think my logic above is pretty sound, and I hope that when Victor updates the market description, it will reflect the "total risk" of "AGI doom" that policymakers care about, not just a subset.

@VictorLi That says 46%: "Probability that humanity has somehow irreversibly messed up our future within 10 years of building powerful AI: 46%"

@GregColbourn Yes, but "AGI takeover", where the AI directly engages in adversarial optimization to intentionally kill off humanity, is closer to what I mean by "AGI doom". I'll update the description to be more explicit; sorry about that.

@pranav [new main account of market maker here] I'm not sure what to make of this; earlier on, he still says that his overall P(doom) is around 10–20%, as before.

@VictorLi Please could you update the bio on the original to indicate that it's your alt? This is the new alt policy on Manifold.