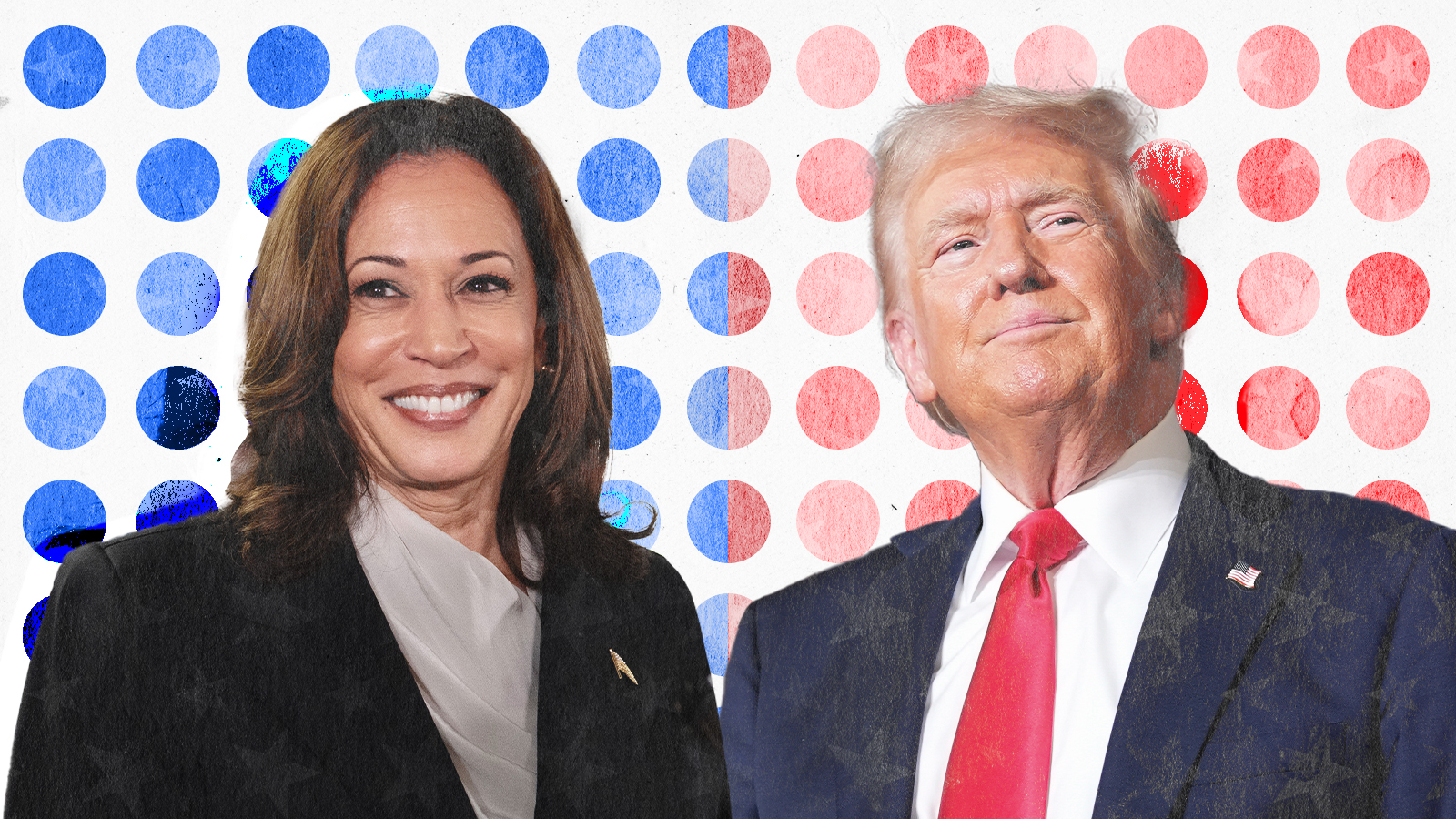

Specifically, this is about predicting which states end up voting for which presidential candidate.

(Default 538 model.)

How in the hell did it end up with 51%... Is this the 538 model everybody is talking about? https://projects.fivethirtyeight.com/2024-election-forecast/ Did it get un-paywalled just recently, or something?

@StepanBakhmarin No it's not paywalled, but I think it's hard at this point to see what the probabilities were 1 week before the election, since it will now be showing the frozen model from the night before.

Are there specific Manifold markets you'll be looking at for each state? Say, the one with the most mana in it?

I'd like to know this too. I'm presuming it's whoever is closer to the EV count? But it could be states won, who knows.

On the contrary, I think that the fact that Manifold has access to 538 should generally make Yes probability go up. Even if it didn't quite pan out that way in 2020.

That said, I think Yes being at 65% or maybe 70% in the future is a bit overconfident.

In what way do you think? I mean the model, not the actual predictions. I would agree that certain factors have thrown a wrench in the model, like it predicting that RFK is actually going to get a significant chunk of the vote.

@StarkLN https://stat.columbia.edu/~gelman/research/published/Harvard_Data_Science_Review.pdf

Bayesianism is not a good way to model tail events

@nikki I think I asked the wrong question. It would be better to ask: in what ways does Elliot's thinking majorly differ from Nate Silver's in a way that you think hurts the model? I didn't keep up with the Nate Silver / Elliot Morris Twitter stuff, and it looks like most of it has been deleted by now.

@LarsDoucet I would advocate for "whatever default model is displayed to a new visitor to the site", but it would be nice to get that confirmed @IsaacKing.

@IsaacKing What probability would you say 538 had given for a state it predicted to be ">99%" likely to have a particular outcome?