This question resolves to the year in which the first compelling (to me) evidence of either of the following appears:

(1) a virus having a neural network as a component is deliberately designed by a malicious actor, and spreads across user hardware.

(2) an AI unboxes and spreads to new hardware in an effort to gain access to more computing resources, or to not be turned off.

"User intent" here refers to the (new) hardware's user, not the AI's (original) user. A controlled demonstration of (1) will not count for this question's resolution, since it would not be "against user intent".

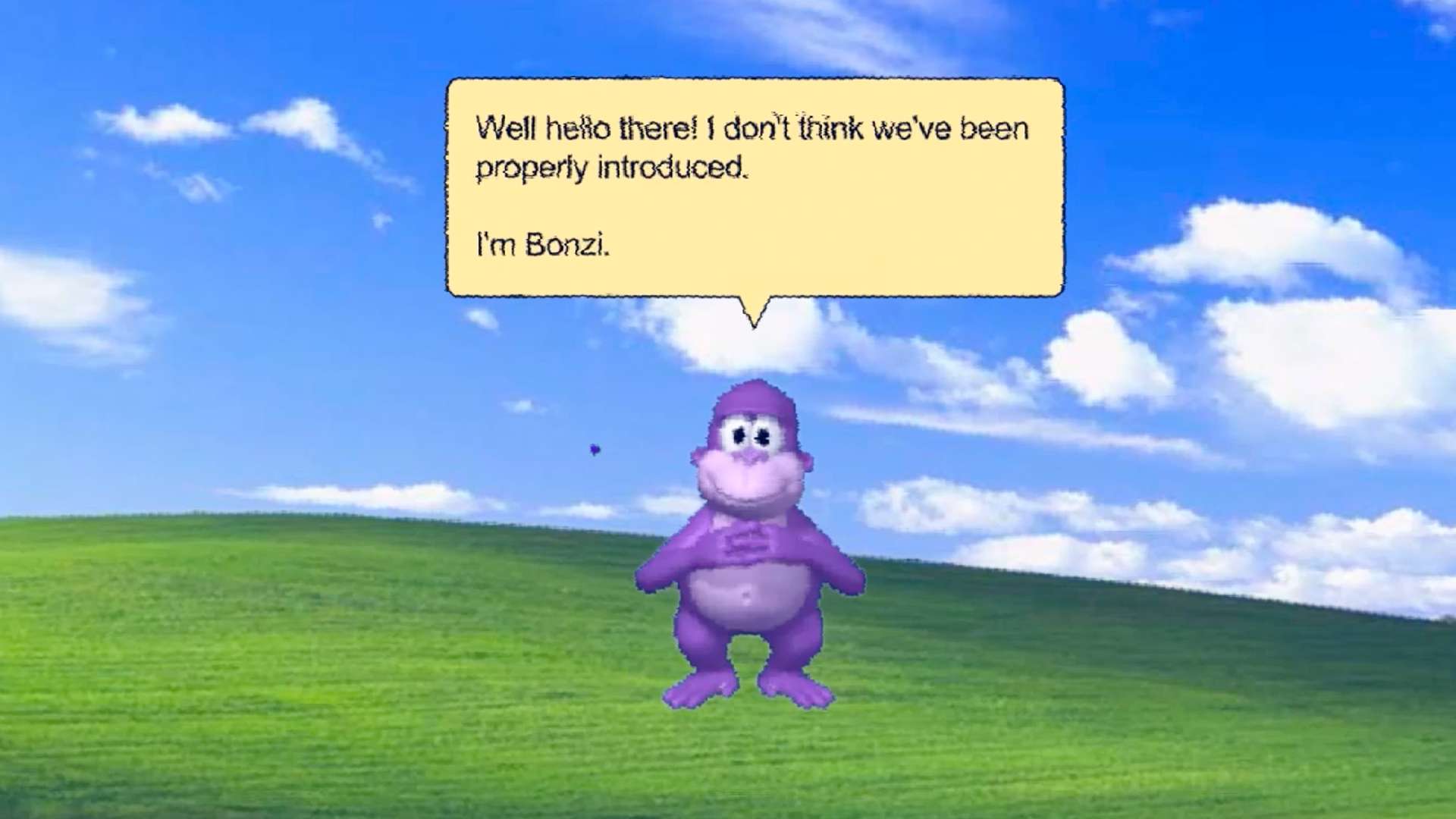

(1) Viruses aren't really a thing anymore because PCs aren't really a thing anymore. It's not 1998 and none of us are using Windows XP. Low economic incentive. (2) Cost is much higher than traditional means, so again low economic incentive. This pushes up the timeframe, though people will create fake NN viruses for lolz and video views, much like all the Bonzi videos.

@alexlyzhov You mean someone exfiltrates proprietary LM parameters? That doesn't qualify here; I can clarify the question text.