ARIA's Safeguarded AI programme is based on davidad's programme thesis, an ambitious worldview about what could make extremely powerful AI go well. ARIA aims to disburse £59m (as of this writing) in pursuit of these ambitions.

https://www.aria.org.uk/wp-content/uploads/2024/01/ARIA-Safeguarded-AI-Programme-Thesis-V1.pdf

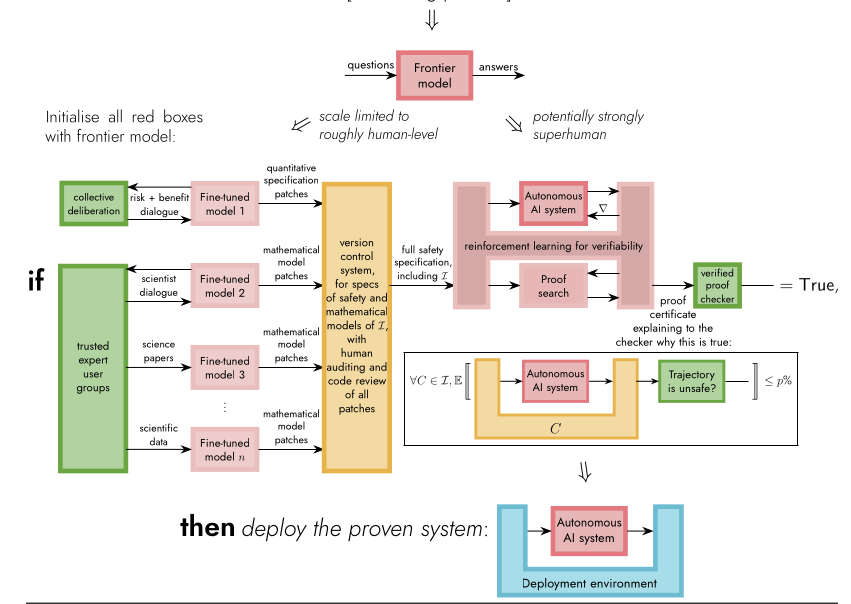

The Safeguarded AI agenda combines world modeling with proof certificates to build "gatekeepers": quantitative computations that simulate the effects of a proposed action and pass it along to meatspace only if it is safe up to a given threshold.

The Safeguarded AI programme is a sequence of three "technical areas" (TA1, TA2, TA3). TA1 began soliciting funding applicants in April 2024. When will TA2 open its funding solicitation?

Resolves YES if ARIA opens a funding solicitation labeled TA2, even if TA2 has undergone substantial changes by then.

TA2's goal is to demonstrate "that we can use frontier AI to help domain experts build best-in-class mathematical models of real-world complex dynamics with relevance to valuable applications, and leverage frontier AI to train autonomous systems that can be verified with reference to those models." Given that other projects are pursuing this goal independently of the ARIA programme, it seems quite likely that it will be achieved long before the TA2 solicitation even opens. So the bet seems to me largely orthogonal to the actual success of the overall Safeguarded AI agenda. Does this question really need to be resolved?