Will an LLM with less than 10B parameters beat GPT4 by EOY 2025?


Ṁ1812 · Resolved YES · Jan 10


How much juice is left in 10B parameters?

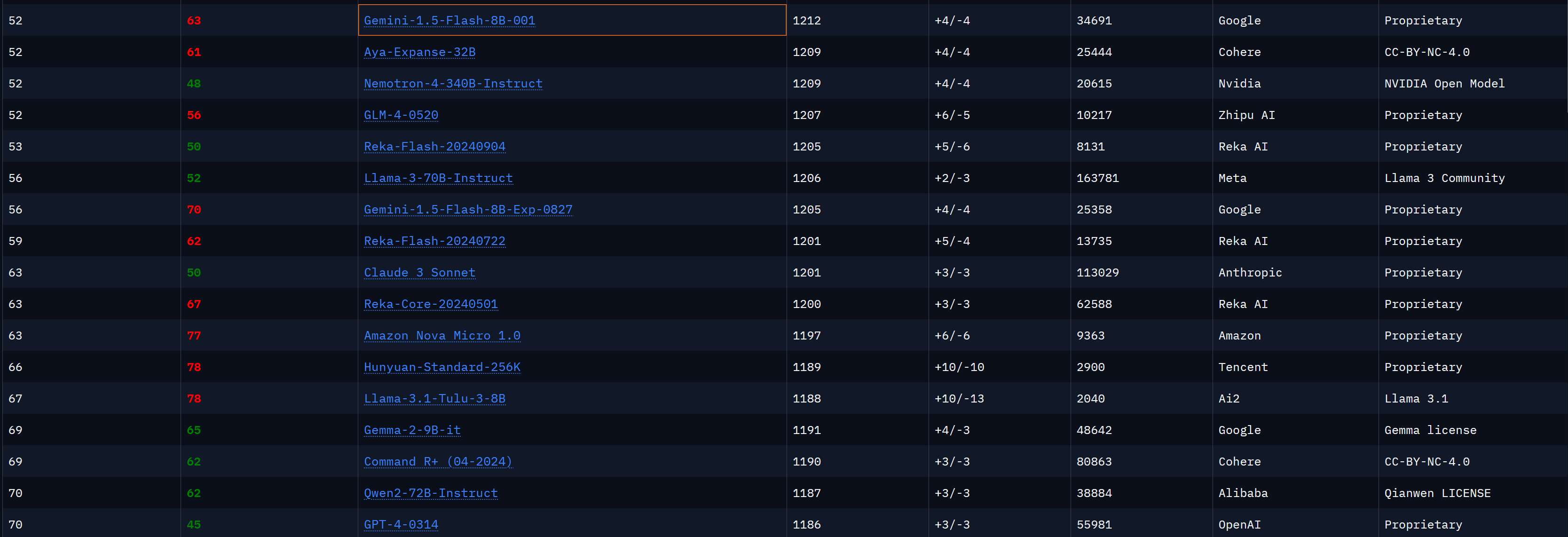

Bar to beat: the original GPT4-0314 (Elo 1188), as judged by the LMSYS Chatbot Arena leaderboard.

Current SoTA under 10B: Llama 3 8B Instruct (1147)
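For a rough sense of what the 41-point gap means, here is a minimal sketch that converts a rating difference into an expected head-to-head score. It assumes the Arena score behaves like a standard Elo rating (base 10, scale 400, ties counted as half a win); the function name `expected_score` is made up for illustration and is not part of any leaderboard tooling.

```python
def expected_score(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Standard Elo expected score of A against B (assumed scale: 400, base 10)."""
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / scale))


# Ratings quoted in the question description.
GPT4_0314 = 1188   # the bar to beat
LLAMA3_8B = 1147   # best sub-10B model when the market was created

gap = GPT4_0314 - LLAMA3_8B
share = expected_score(LLAMA3_8B, GPT4_0314)

print(f"Gap to close: {gap} points")
print(f"Expected score of Llama 3 8B vs GPT4-0314: {share:.3f}")
```

Under these assumptions a gap of a few dozen points is a modest tilt in head-to-head votes rather than a blowout, which is why the question is close.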

This question is managed and resolved by Manifold.


bought Ṁ500 YES

@mods This resolves YES. The creator's account is deleted, but Gemini 1.5 Flash-8B easily clears the bar of 1188 with an Elo of 1212; see https://lmarena.ai/

@singer There is a without-refusal board on LMSYS. The gap between the main board and that one shows how much of an advantage not having censorship gives you.

Related questions


Will the best LLM in 2025 have <1 trillion parameters?

42% chance

Will the best LLM in 2025 have <500 billion parameters?

17% chance

Will the best LLM in 2026 have <1 trillion parameters?

40% chance

Will the best LLM in 2027 have <1 trillion parameters?

26% chance

Size of smallest open-source LLM matching GPT 3.5's performance in 2025? (GB)

-

China will make a LLM approximately as good or better than GPT4 before 2025

89% chance

Will the best LLM in 2026 have <500 billion parameters?

27% chance

Will the best LLM in 2027 have <500 billion parameters?

13% chance

Will the best LLM in 2027 have <250 billion parameters?

12% chance