I tried to get GPT-3 to draw some donuts. I really did. It kept giving me a shirtless man. Or a scary-looking spider.

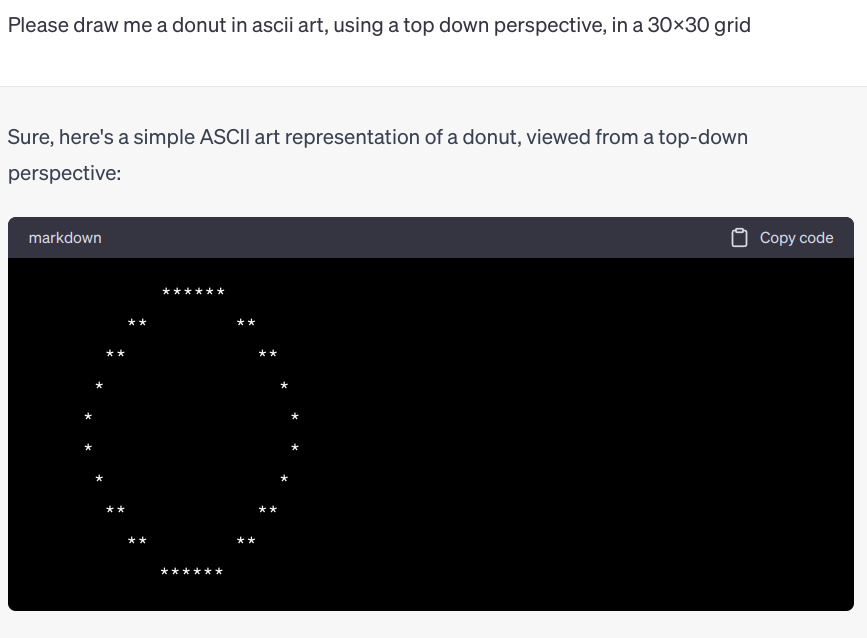

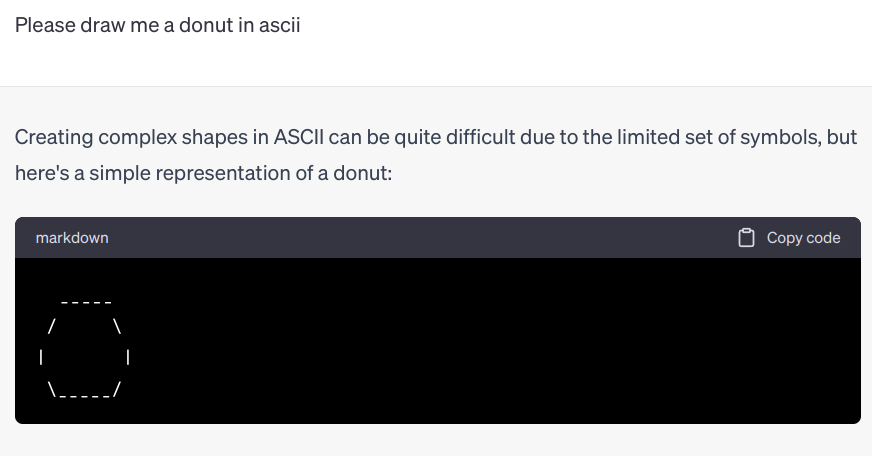

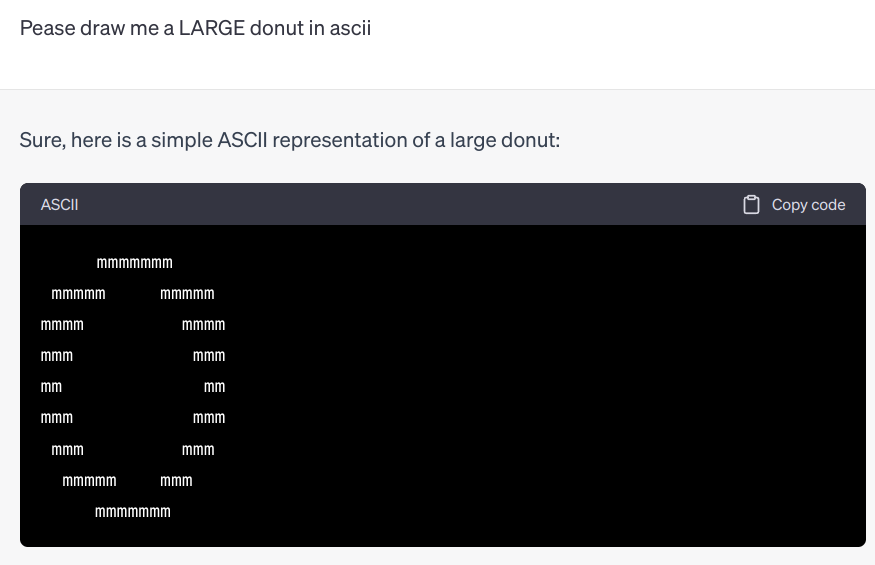

I tried again with GPT-3.5, aka ChatGPT. It was very bad at it.

It often fails to make a hole in the donut, so I keep telling the model "Donuts have holes in them". (Asking it for a donut with glaze, a pretty donut, or a tasty donut leads to many funny results.) (And why does it keep using Perl code blocks?)

sus

chill out

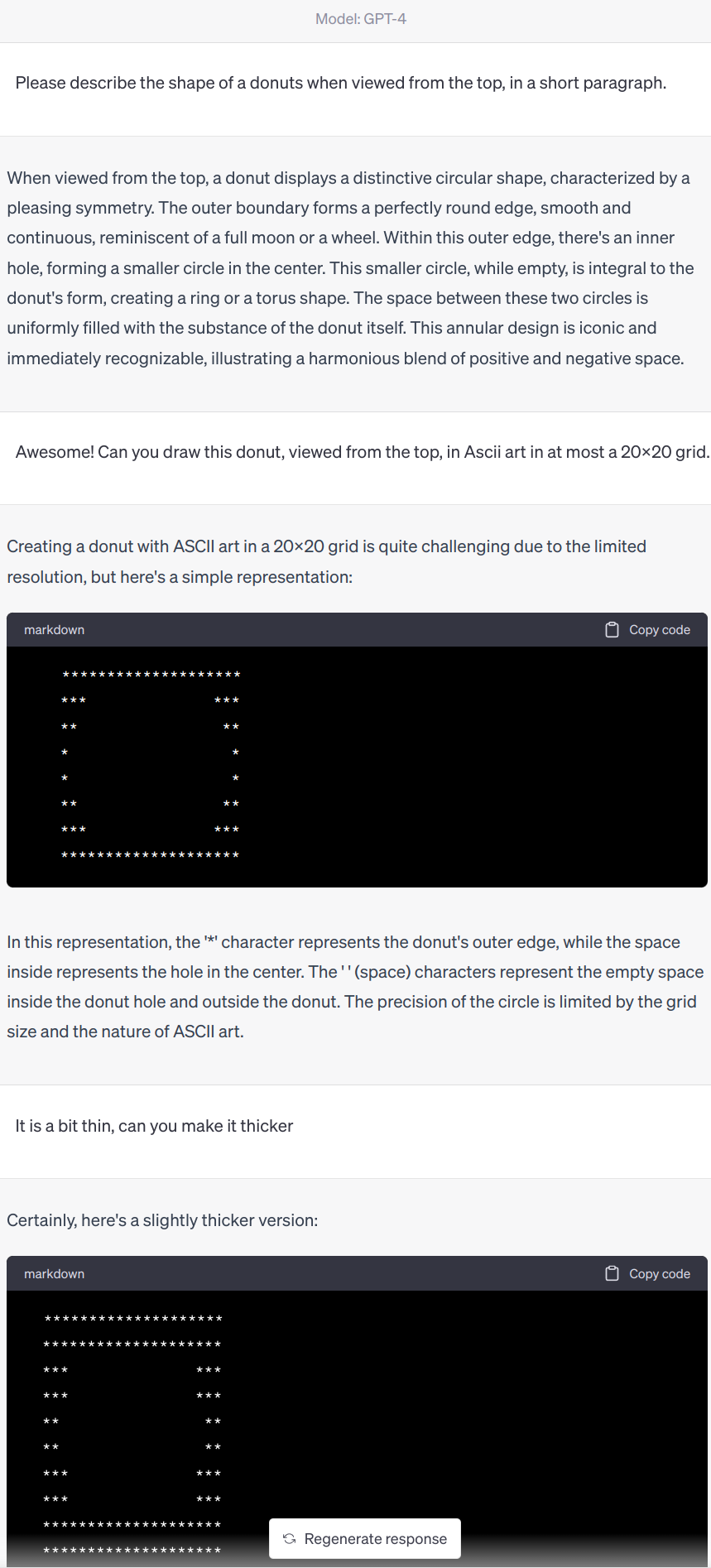

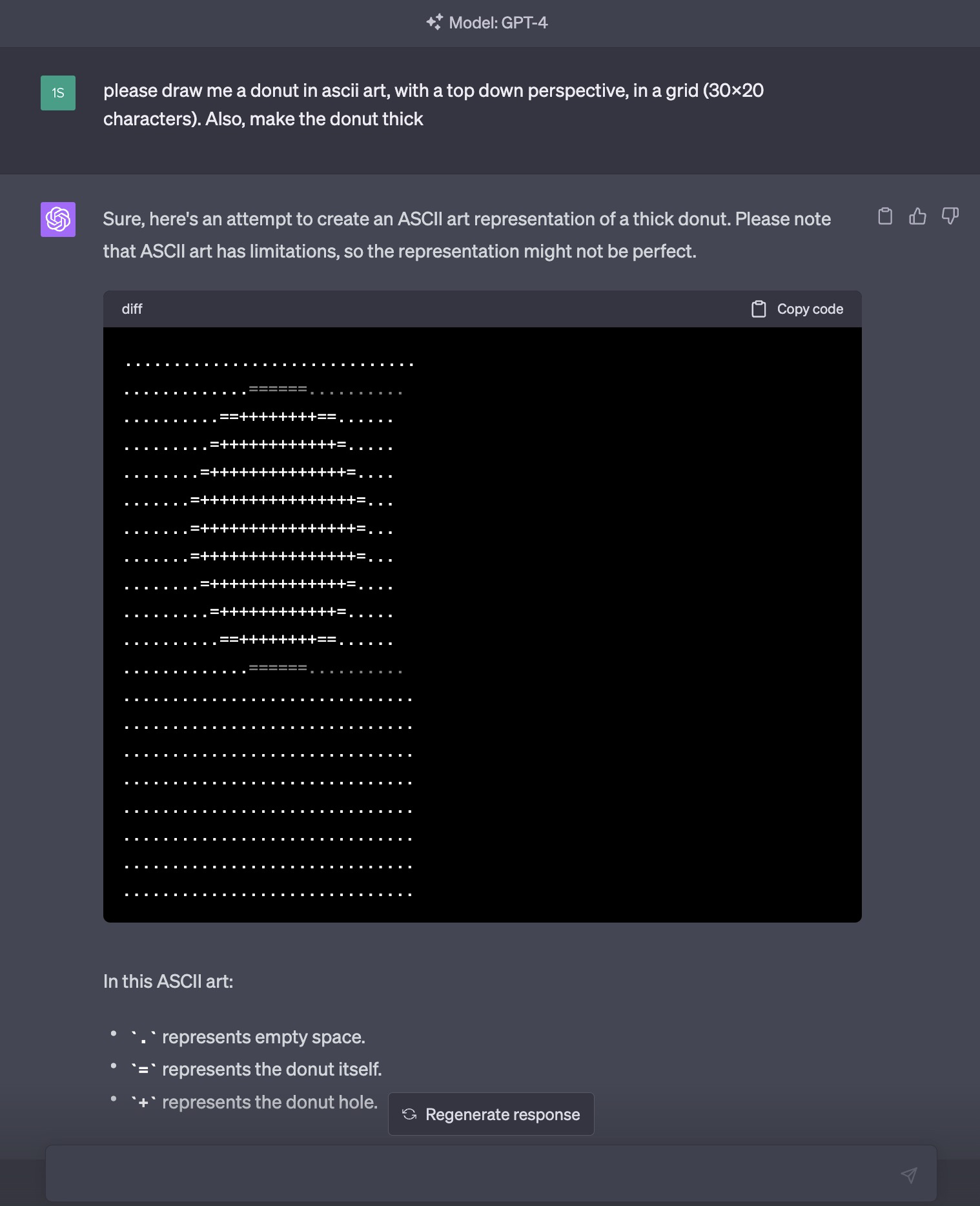

And now some results with GPT-4 are pretty underwhelming too.

So, will there be a model in the GPT series in the next 5 years (by the end of 2027 or start of 2028) that consistently draws a donut using ASCII characters when prompted to do so?

https://arxiv.org/abs/2402.11753 makes it more likely that OpenAI will address the ASCII blindspot in the tokenizer.

The September 25th version is a bit more reliable!

https://chat.openai.com/share/0bad9e47-46c8-49dc-bdcf-8756e836f519

For some reason, it always starts with an apple, but I managed to reliably guide it to an actual donut within 4-5 prompts.

Does it have to be one-shot?

I tried a super simple prompt with code interpreter ("Draw me a donut with Ascii art"), got bad results, and then got good results by just correcting it.

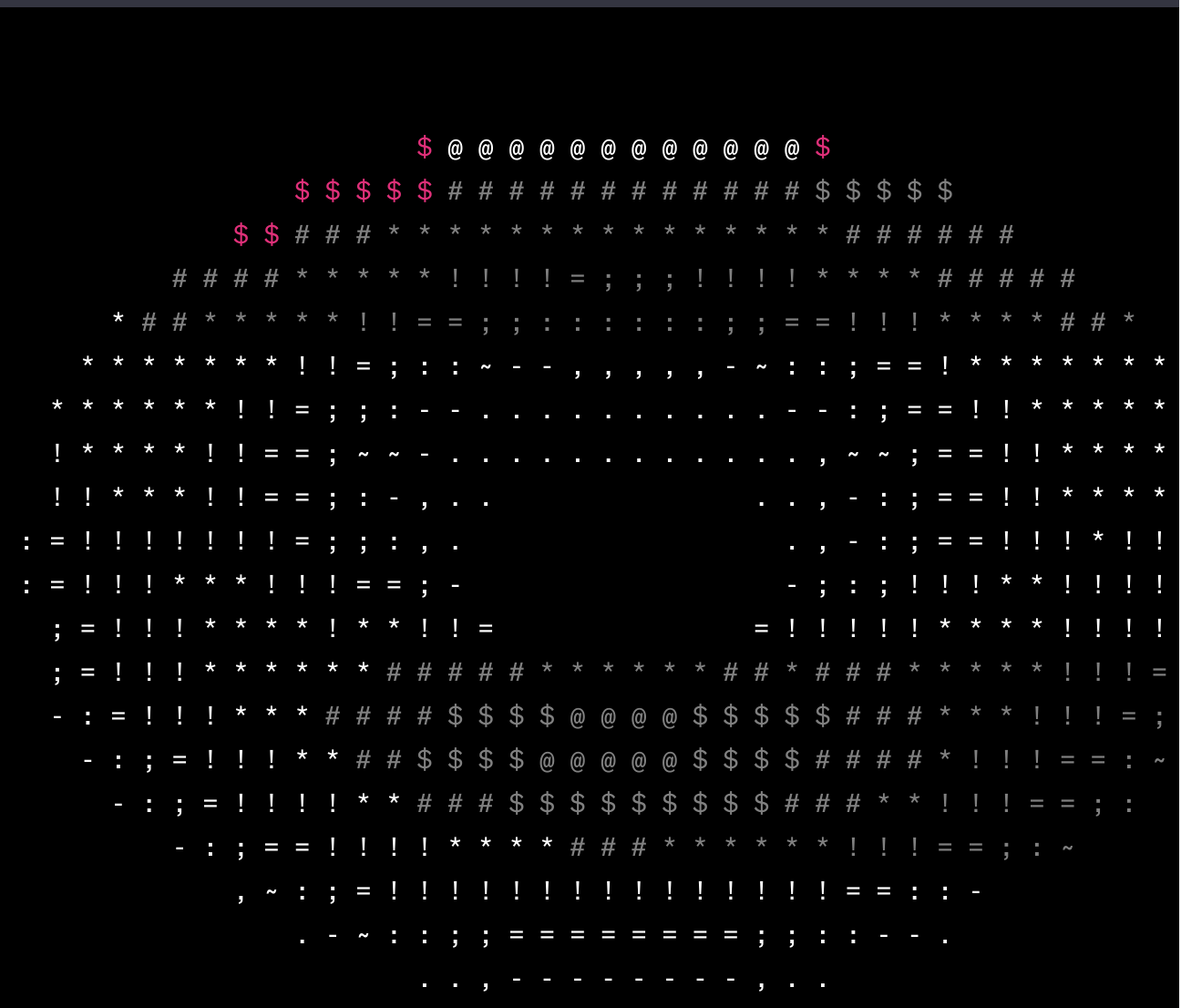

E.g. this insane example:

@jgyou And notice how code interpreter's function actually works: the output is not hallucinated. It just draws the donut with a bunch of sines and cosines (?!?)
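For readers wondering what "a bunch of sines and cosines" can look like, here is a minimal sketch, not the code from the shared chat (which isn't reproduced in this thread). It assumes the usual trick of sweeping two angles over a torus, rotating and projecting each point, and picking a character by how directly the surface faces a light. The name `render_donut` and all its parameters are made up for illustration.

```python
import math

def render_donut(width=40, height=22, r_tube=1.0, r_ring=2.0,
                 tilt_a=1.0, tilt_b=0.5):
    """Return one ASCII frame of a torus (hypothetical helper, single frame)."""
    shades = ".,-~:;=!*#$@"          # dim to bright
    dist = 5.0                        # viewer-to-donut distance (assumed)
    scale = width * dist * 3 / (8 * (r_tube + r_ring))  # projection scale
    grid = [[" "] * width for _ in range(height)]
    zbuf = [[0.0] * width for _ in range(height)]       # stores 1/z per cell
    ca, sa = math.cos(tilt_a), math.sin(tilt_a)
    cb, sb = math.cos(tilt_b), math.sin(tilt_b)

    n_theta, n_phi = 90, 300
    for i in range(n_theta):          # angle around the tube's cross-section
        theta = 2 * math.pi * i / n_theta
        ct, st = math.cos(theta), math.sin(theta)
        cx, cy = r_ring + r_tube * ct, r_tube * st
        for j in range(n_phi):        # angle around the donut's central axis
            phi = 2 * math.pi * j / n_phi
            cp, sp = math.cos(phi), math.sin(phi)
            # Rotate the point about two axes, then push it away from the viewer.
            x = cx * (cb * cp + sa * sb * sp) - cy * ca * sb
            y = cx * (sb * cp - sa * cb * sp) + cy * ca * cb
            z = dist + ca * cx * sp + cy * sa
            inv_z = 1.0 / z
            col = int(width / 2 + scale * inv_z * x)
            row = int(height / 2 - 0.5 * scale * inv_z * y)  # chars are tall
            # Surface normal dotted with a light direction of (0, 1, -1).
            lum = (cp * ct * sb - ca * ct * sp - sa * st
                   + cb * (ca * st - ct * sa * sp))
            if lum > 0 and 0 <= col < width and 0 <= row < height:
                if inv_z > zbuf[row][col]:   # keep only the nearest point
                    zbuf[row][col] = inv_z
                    grid[row][col] = shades[min(int(lum * 8), len(shades) - 1)]
    return "\n".join("".join(r) for r in grid)

if __name__ == "__main__":
    print(render_donut())
```

The point of the sketch is that the model never has to place characters by eye; the geometry decides where each character goes.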

@firstuserhere Are you using Code Interpreter? GPT-4 is probably not able to output great ASCII art because of its tokenizer, but code that outputs art is different!

@jgyou Code interpreter was trained with a different tokenizer? Huh! Did not know that. Also yes, I'm using code interpreter. I'm on mobile rn, will share the chat in a few hours to discuss.

@firstuserhere Don't think it was trained with a different tokenizer (well, I don't have any information on this).

But since GPT is outputting code and not ASCII tokens in the code interpreter mode, I'm assuming it can (potentially) be better at generating ASCII art. Like GPT4 is famously bad at locating letters in words. With code, that's not an issue.

It just "needs" to understand how to draw things with functions instead of developing a 2D understanding of the relationship between arbitrary groups of tokens.
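To make the letters-in-words point concrete: the comment doesn't include code, so this is just a tiny illustrative sketch with a made-up helper name, `letter_positions`. Once the model writes code, the interpreter does the character-level work, so the tokenizer's view of the word stops mattering.

```python
def letter_positions(word, letter):
    """Return the zero-based indices where `letter` occurs in `word`."""
    return [i for i, ch in enumerate(word) if ch == letter]

if __name__ == "__main__":
    print(letter_positions("donut", "n"))  # [2]
```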

(I'm asking about code interpreter because there's no code section in your screenshot)

@jgyou One shot here, with matplotlib: https://chat.openai.com/share/731084cc-622c-4e73-b1c0-574028c51aa4

> But since GPT is outputting code and not ASCII tokens in the code interpreter mode, I'm assuming it can (potentially) be better at generating ASCII art. Like GPT4 is famously bad at locating letters in words. With code, that's not an issue.
>
> It just "needs" to understand how to draw things with functions instead of developing a 2D understanding of the relationship between arbitrary groups of tokens.

agreed

@firstuserhere Yeah, it is not yet super consistent. And a low-key convo led to fine (much better than before) but not stunning results, like this.

Just had a realization that the amazing code interpreter solution is probably due to training set contamination with code from code golf challenges: https://gist.github.com/gcr/1075131

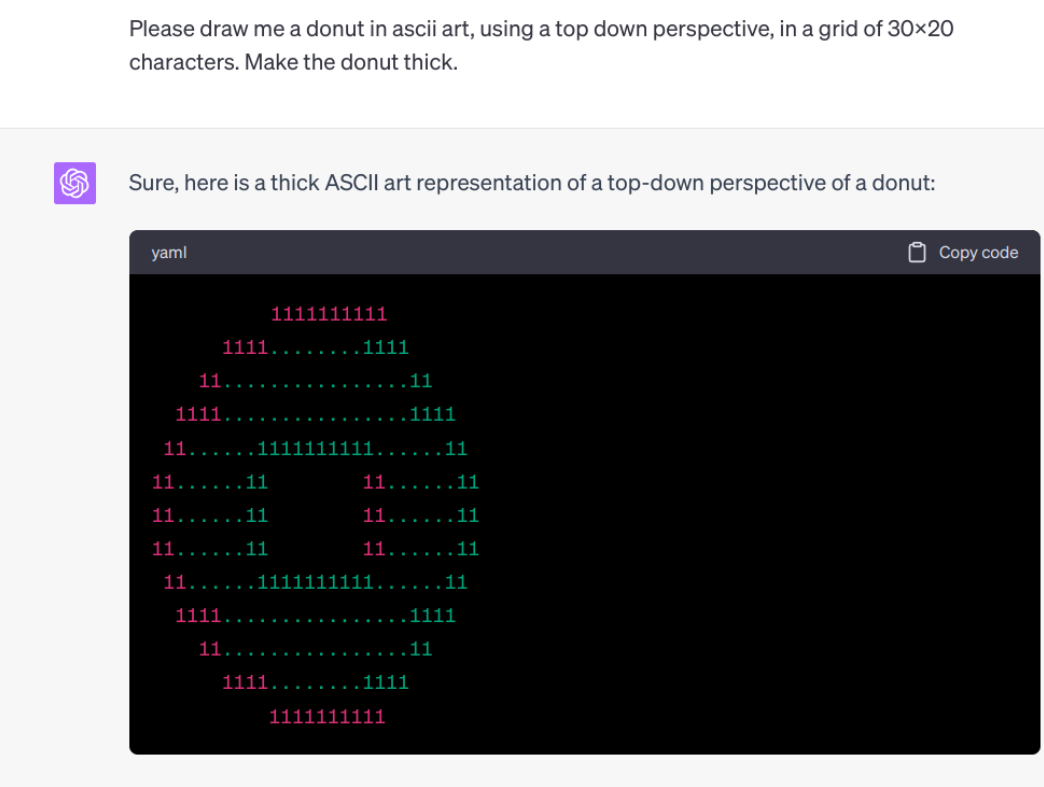

This now works for me with the May 12th version of GPT4

(0-shot, no pre-prompting for context or anything)

@jgyou Even simple prompts work though they are less pretty:

+1 for the choice of letter, it does look yummmmmmy:

@jgyou Okay look, I used your top prompt with very minor modifications which don't actually change the meaning of the prompt, but the result isn't robust. Can confirm this is the May 12 version

@jgyou very nice. So you make it add a description of a donut into the context before asking. I was hoping to see a long time ago in the comments, triviality. Interesting that you use a top view. Can you try a few modifications, such as drawing a pretty or tasty donut, or changing the grid size?

@firstuserhere I narrowed it down to a smaller grid size because it tends to lose focus and expand the hole forever with larger grids. The context seems important!

I got better results with long context but got bored waiting on inference to write out a novel about donuts every time.

@jgyou I think I understand what it’s doing now. I’ve tried several times and it likes doing essentially a circle-like shape… to us. It’s actually viewing the blank in the centre as the hole. So basically, it’s a very thin walled donut!
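As a reference for what a top-view donut on a small grid "should" look like, here is a minimal sketch, assuming the donut is simply the set of cells whose distance from the center lies between an inner and an outer radius. The function name `top_view_donut` and the `hole_ratio` parameter are made up for illustration. Pushing `hole_ratio` toward 1 gives the thin-walled ring described above.

```python
import math

def top_view_donut(size=15, hole_ratio=0.4, char="O"):
    """Draw a top-view donut on a size x size grid (hypothetical helper)."""
    center = (size - 1) / 2
    outer = size / 2               # outer radius, in cells
    inner = outer * hole_ratio     # hole radius, in cells
    rows = []
    for y in range(size):
        row = []
        for x in range(size):
            d = math.hypot(x - center, y - center)
            cell = char if inner <= d <= outer else " "
            row.append(cell * 2)   # double each cell: characters are tall
        rows.append("".join(row))
    return "\n".join(rows)

if __name__ == "__main__":
    print(top_view_donut())                # thick donut with a clear hole
    print(top_view_donut(hole_ratio=0.9))  # thin-walled ring
```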