On August 26th, Eliezer tweeted (https://twitter.com/ESYudkowsky/status/1563282607315382273):

In 2-4 years, if we're still alive, anytime you see a video this beautiful, your first thought will be to wonder whether it's real or if the AI's prompt was "beautiful video of 15 different moth species flapping their wings, professional photography, 8k, trending on Twitter".

Will this tweet hold up? (The part about AI video generation, not about whether we'll all be dead in 2-4 years.) Giving max date range to be generous.

This market resolves YES if at close (end of 2026) my subjective perception is that this was a good take--e.g., AI-generated video really is that good--and NO if it seems like Eliezer was importantly wrong about something, e.g., AI-generated video still sucks, or still couldn't be the cause for serious doubt about whether some random moth footage was made with a camera or not.

I reserve the right to resolve to an early YES if it turns out Eliezer was obviously correct before the close date. I won't dock points if he ends up having been too conservative, e.g., a new model comes out in 6 months with perfect video generation capabilities.

I guess this market resolves N/A if we all die, but, well, y'know.

Betting policy: I will not bet in this market (any more than I already have, and I've long sold all my shares).

@HenriThunberg It's getting closer but not quite there. It doesn't have the full-body motion the original video has, just the wings moving. The resolution is also clearly lower.

@EvanDaniel That said, I don't think it has to be perfect before my first thought is in fact that and I have to stop and look at it momentarily to decide.

@EvanDaniel agreed, I think people have the burden of proof a bit flipped compared to what the description says.

To me, it doesn't seem to be about "Can I tell if it's AI?", but rather "Am I sure this is not AI?", which makes one hell of a difference.

@HenriThunberg Right. Am I sure it's not AI at a glance or do I have to think about it? Here I think it looks "off" at a glance, but we might be close enough that we're arguing about "if I'm paying attention vs. kinda passively consuming", which means... it's very close to good enough for my read of this question's standards. At least by my judgment. I think it'll be pretty obvious within 6-12 months from now, if we don't move the goalposts too much.

Based on the video that was chosen in the tweet, it seems that the prediction was "AI will be able to make highly intricate and photorealistic clips that a layperson cannot distinguish from reality". If the prediction was supposed to be "AI will be able to make ordinary clips that could be mistaken for real at a glance", then why was the moth video chosen? Notice that the tweet says "anytime you see a video this beautiful".

There's also another point which I don't think anyone paid attention to, which is the "trending on twitter" part of the prompt. I don't know if this was a joke or if the author thought that AI would be powerful enough to carry out psyops. If it's the latter, that does add weight to the stricter interpretation of the tweet.

your first thought will be to wonder

Your first thought being to wonder is entirely consistent with being able to eventually figure it out with effort.

the "trending on twitter" part of the prompt. I don't know if this was a joke

Image generators of the era of the tweet definitely produced better output when text like that was included in the prompt. It's neither a joke nor anything about psyops; it falls in the same category as the "8K" piece of the prompt -- note that nothing here is in 8K resolution.

@EvanDaniel Ok but if we're going to take the "your first thought will be to wonder" line literally, most of the general population is not doubting whether the videos they see online are real. It's certainly not their first thought, at least.

@ItsMe So if the question is "Are AI videos indistinguishable from reality", the market would currently resolve NO.

If it's "Are AI videos not easily distinguishable from reality", the market would currently resolve YES.

And if it's "Are AI videos causing the general public to doubt whether the videos they see are real" the market would currently resolve NO.

I don't think the answers to these questions are going to change in the next year. So the market will probably resolve according to whichever interpretation journcy picks.

@ItsMe looking back at older comments, journcy says

"The central claim Eliezer makes IMO is not so much about the quality of videos being generated as it is the mindset people will be in given what capabilities are available and in use. Where, yeah, "people" is kinda vague; probably something closer to "person following EY on Twitter" than "literally any human alive" or even "any English-speaking person," but also if it's literally just LessWrongers or whatever being paranoid that might be kind of on the fence for me."

So I think it's interpretation #3 which counts here. But with tech-literate people instead of "general public". Still I think it would currently resolve NO. When a typical computer science major sees a video on Twitter, for example, he does not normally question if it's real.

@ItsMe If you mean, is this interpretation correct:

And if it's "Are AI videos causing the general public to doubt whether the videos they see are real" the market would currently resolve NO.

I'll reiterate that "the general public" is not quite the demographic I have in mind, but that I also haven't made explicit even in my head exactly what demographic I do have in mind. I'm mostly trying to not think about it and preserve my ability to look at the Tweet out of the context of this market existing.

Since this market is getting so big (almost 1 mil mana volume) I would suggest adding this sort of clarification to the description. I expect that most people who see this market would just interpret "does AI = moth video". It sounds like a pedantic clarification to make, but because the margins have become so small, the interpretation now matters more than the actual state of AI.

@HenriThunberg I'm kind of amazed how much progress has slowed down and how far off this still is, 18 months after Sora 1!

Idea: use AI to make 100 videos of 15 moths flapping their wings. You can use different prompts, but they have to be short and simple, like in the tweet's example. Of the 100 videos, pick the best one as the contender. Then, without editing it, show the contender and the original moth video to 100 people, and ask them which one is real and which is AI. If fewer than 90 of them get it right, then this market should resolve YES. Otherwise it should resolve NO.

What do y'all think?
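
For what it's worth, the decision rule in the idea above could be sketched roughly like this. The function names, and the default of 100 viewers with a threshold of 90, are just my illustrative reading of the proposal, not anything the market creator has agreed to. The second helper is an extra (not part of the proposal) that shows how unlikely 90+ correct answers would be if viewers were purely guessing.

```python
from math import comb


def resolve_market(correct_guesses: int, viewers: int = 100, threshold: int = 90) -> str:
    """Hypothetical resolution rule: YES if fewer than `threshold` viewers
    correctly identify which video is real and which is AI."""
    if not 0 <= correct_guesses <= viewers:
        raise ValueError("correct_guesses must be between 0 and viewers")
    return "YES" if correct_guesses < threshold else "NO"


def prob_at_least(k: int, n: int, p: float) -> float:
    """Binomial probability of at least k correct answers out of n,
    assuming each viewer is independently right with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


if __name__ == "__main__":
    print(resolve_market(89))            # fewer than 90 correct
    print(resolve_market(90))            # 90 or more correct
    print(prob_at_least(90, 100, 0.5))   # chance of 90+ correct by coin-flipping
```

Under this reading, the bar for NO is high: viewers have to be right at least 90% of the time, which essentially never happens by chance alone.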

Possible future extrapolation:

In 2-4 years, if we're still alive, anytime you see something IRL this beautiful, your first thought will be to wonder whether it's real or if your VR/AR glasses' AI prompt was "beautiful scene of a moth flapping its wings, professional photography, 8k, trending on Twitter"

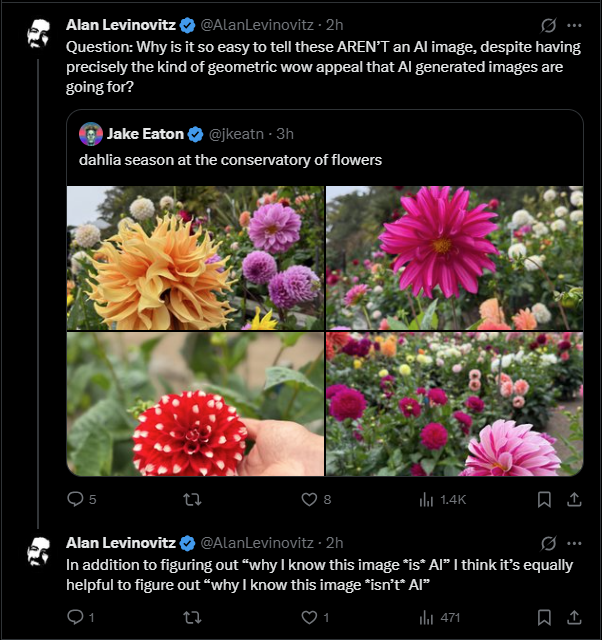

This Alan Levinovitz tweet opens up an interesting attack surface for this market. Maybe over the next few years we'll actually get better at spotting the uncanny valley!

@JoshuaWilkes I think the answer to Levinovitz's question might be that he thinks it is easy to tell that those images aren't AI because the caption says that they aren't AI

@galaga agree. At sub thumbnail size I would've guessed those were AI. Full size I think AI would've loved to bokeh the shit out of those backgrounds but I bet you could get that look with the right prompt

@galaga Thank you for confirming that we are currently not in the "casually beat ultra high quality/effort real videos" stage of AI gen, even for series of short clips. Though I wouldn't be surprised by someone who put in a lot of effort being able to do it with AI.

@galaga is there a concise way to specify in the prompt that the wings are being distorted by air resistance due to the high pre-slo-mo speed of wing movement, such that they resemble human sized fabric sheets moving at human speeds? That's really the only thing missing for me

@TheAllMemeingEye dude their wings are clipping into each other. A kindergartener could tell that's AI.